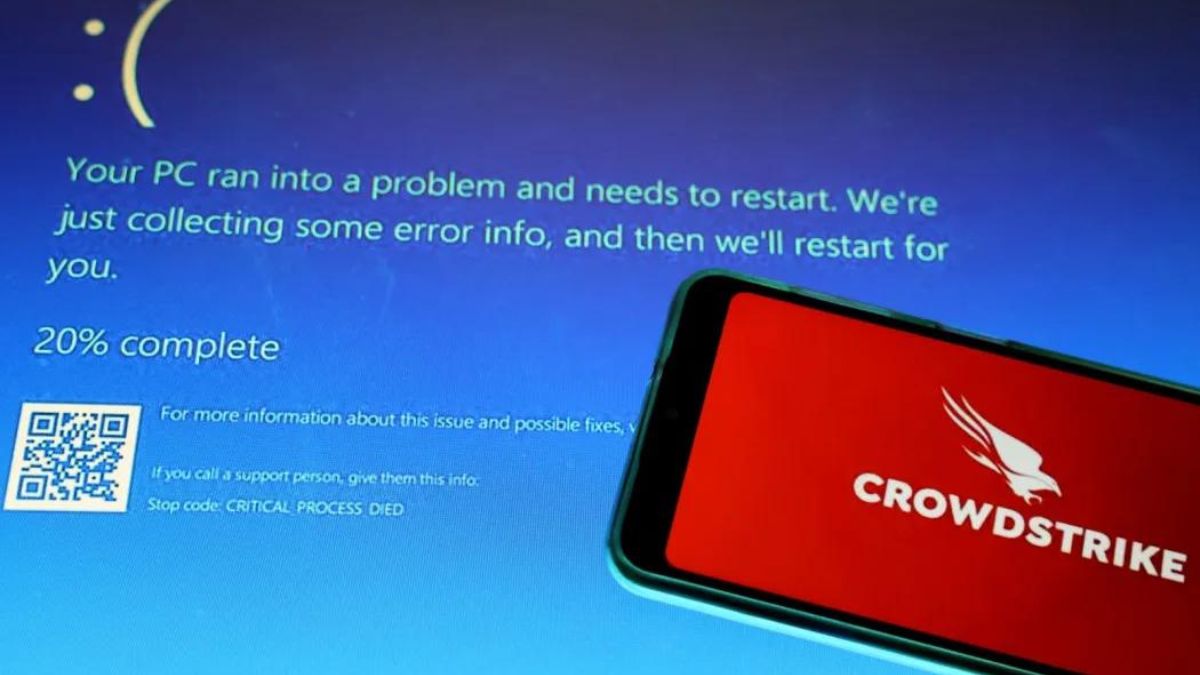

When computer screens turned blue worldwide on Friday, flights were grounded, hotel check-ins halted, and freight deliveries stalled. Businesses reverted to pen and paper, and some initially suspected a cyberterrorist attack. The actual cause, however, was mundane but far-reaching: a botched software update from cybersecurity firm CrowdStrike.

Understanding the CrowdStrike Outage

“In this case, it was a content update,” explained Nick Hyatt, Director of Threat Intelligence at security firm Blackpoint Cyber. Due to CrowdStrike’s extensive customer base, the impact was felt globally.

“One mistake has had catastrophic results. This highlights how intertwined modern society is with IT — from coffee shops to hospitals to airports, a mistake like this has massive ramifications,” Hyatt noted.

The content update affected CrowdStrike’s Falcon monitoring software, which is designed to detect malware and other malicious behavior on endpoints like laptops, desktops, and servers. Falcon’s auto-update feature inadvertently rolled out buggy code, leading to widespread disruption. “Auto-update capability is standard in many software applications, and isn’t unique to CrowdStrike. It’s just that due to what CrowdStrike does, the fallout here is catastrophic,” Hyatt added.

Addressing the Fallout

Although CrowdStrike quickly identified and addressed the problem, restoring many systems within hours, the damage was not easily reversed for organizations with complex systems.

“We think it will take three to five days before things are fully resolved,” said Eric O’Neill, a former FBI counterterrorism operative and cybersecurity expert. He pointed out that the timing of the outage — a summer Friday — compounded the problem, with many offices empty and IT support scarce.

Lessons Learned: Incremental Updates and Better Safeguards

O’Neill emphasized the importance of incremental updates. “What CrowdStrike was doing was rolling out its updates to everyone at once. That is not the best idea. Send it to one group and test it. There are levels of quality control it should go through,” he said.
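The "send it to one group and test it" approach O’Neill describes is often called a staged or ring-based rollout. The following is a minimal sketch of that idea in Python, not a description of CrowdStrike's actual release pipeline. The names `staged_rollout`, `RolloutResult`, the `deploy` callback, and the 1% failure threshold are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass
class RolloutResult:
    completed_rings: List[str]   # rings that received the update without tripping the threshold
    halted_at: Optional[str]     # ring where the rollout stopped, or None if it finished


def staged_rollout(
    rings: Sequence[Tuple[str, Sequence[str]]],
    deploy: Callable[[str], bool],
    max_failure_rate: float = 0.01,  # hypothetical threshold, for illustration only
) -> RolloutResult:
    """Push an update ring by ring, stopping as soon as one ring looks unhealthy.

    `deploy(host)` is an assumed callback that installs the update on one host
    and returns True if the host is still healthy afterwards.
    """
    completed: List[str] = []
    for name, hosts in rings:
        failures = sum(1 for host in hosts if not deploy(host))
        if hosts and failures / len(hosts) > max_failure_rate:
            # Halt here: later (larger) rings never receive the bad update.
            return RolloutResult(completed, halted_at=name)
        completed.append(name)
    return RolloutResult(completed, halted_at=None)


# Example: a simulated update that breaks every host in the "canary" ring.
rings = [
    ("internal", ["int-1", "int-2"]),
    ("canary", ["can-1", "can-2", "can-3", "can-4"]),
    ("everyone", [f"host-{i}" for i in range(100)]),
]
result = staged_rollout(rings, deploy=lambda host: not host.startswith("can-"))
print(result.completed_rings, result.halted_at)
```

In this simulated run the rollout stops at the canary ring, so the 100 hosts in the final ring are never touched. A real pipeline would also need health signals richer than a single boolean, plus a way to roll back the hosts already updated.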

Peter Avery, Vice President of Security and Compliance at Visual Edge IT, echoed this sentiment, advocating for more rigorous testing in varied environments before widespread release. “You need the right checks and balances in companies. It could have been a single person that decided to push this update, or somebody picked the wrong file to execute on,” Avery said.

The IT industry calls this a single point of failure: an error in one part of a system that cascades into a technical disaster across interconnected networks and industries.

Building Resilient IT Systems

The event has prompted calls for heightened cyber preparedness among companies and individuals. “The bigger picture is how fragile the world is; it’s not just a cyber or technical issue. There are many phenomena, like solar flares, that can cause an outage,” Avery said.

Javad Abed, an assistant professor of information systems at Johns Hopkins Carey Business School, emphasized the need for businesses to build redundancy into their systems. “A single point of failure shouldn’t be able to stop a business, and that is what happened. You can’t rely on only one cybersecurity tool,” Abed said. While building redundancy is costly, the alternative — as evidenced by the recent outage — is more expensive.

“I hope this is a wake-up call, and I hope it causes some changes in the mindsets of business owners and organizations to revise their cybersecurity strategies,” Abed added.

Kernel-Level Code and Systemic Challenges

Nicholas Reese, a former Department of Homeland Security official and instructor at New York University’s SPS Center for Global Affairs, highlighted the systemic issues within enterprise IT. Too often, he said, cybersecurity, data security, and tech supply chains are treated as “nice-to-have” rather than essential.

On a micro level, the disruptive code ran at the kernel level, the core layer of the operating system that mediates all communication between a computer’s hardware and software. “Kernel-level code should get the highest level of scrutiny,” Reese said, advocating for separate approval and implementation processes with strict accountability.

The broader challenge lies in managing third-party vendor products, all with potential vulnerabilities. “How do we look across the ecosystem of third-party vendors and see where the next vulnerability will be? It is almost impossible, but we have to try,” Reese said. He stressed the importance of backup and redundancy, acknowledging the difficulty businesses face in justifying the cost of preparing for hypothetical scenarios.

The CrowdStrike incident underscores the critical need for robust risk management and contingency planning. As the industry moves forward, balancing regulation with market-driven solutions will be key to making global IT infrastructure more secure and resilient.