Microsoft is preparing to bring back Recall in Windows 11. The feature drew heavy criticism over security and privacy risks when it was first announced. After shelving it for several months, Microsoft has now announced plans to reintroduce Recall on Copilot+ PCs in November, with extra safeguards intended to address that initial backlash.

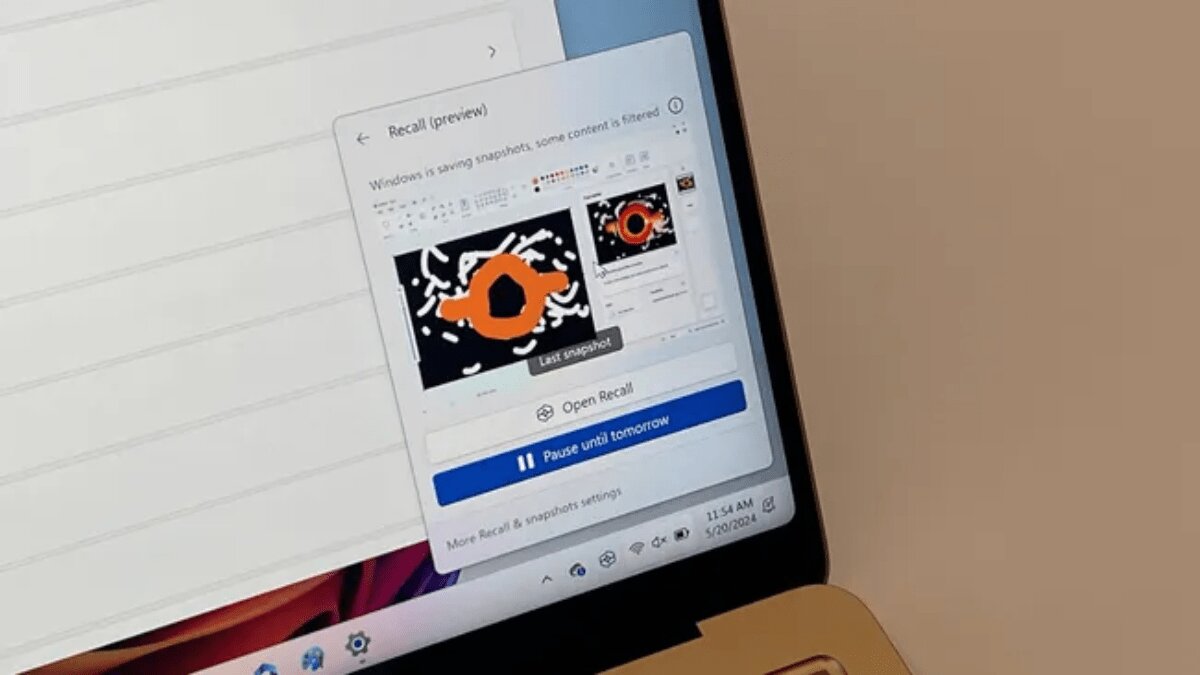

According to a statement reported by the BBC, Recall's return will focus on security, and Microsoft has set out the specific measures in a blog post aimed at easing worries about how the feature works. But what is Recall, and why did it raise eyebrows? Recall is an AI-powered tool that lets users search their PC's history using screenshots, or “snapshots,” that it automatically takes of what is on their screen. That sounds useful, but the idea of constant screen capture left many users worried about potential privacy breaches.

Key Changes to Recall’s Security:

One of the biggest updates is that Recall will no longer be enabled automatically. Users must choose to turn it on during setup, so it cannot be switched on by accident. As originally announced, it was on by default, which raised concerns that people unfamiliar with the feature might unknowingly expose sensitive information.

Microsoft emphasized that during the setup of Copilot+ PCs, users will have a clear option to opt into Recall. If they don’t actively enable it, Recall remains off, and no screenshots will be taken or saved.

Further security measures include encrypting all data related to Recall, including the snapshots themselves. On top of that, users must authenticate with Windows Hello (for example, with a fingerprint or facial recognition) to access Recall, so that only the owner can view the saved snapshots.

Another layer of protection comes from a Virtualization-based Security Enclave (VBS Enclave). This is essentially an isolated, protected environment that uses Windows' virtualization-based security to wall off Recall's sensitive operations from the rest of the system, so that even an administrator on your PC can't snoop on your snapshots. Microsoft itself can't access your Recall data either, because it is all stored locally rather than in the cloud.

Addressing Privacy Concerns:

One major worry with Recall was the possibility of it capturing sensitive information, like banking details or passwords. Microsoft has tackled this by implementing filters that block Recall from capturing sensitive data such as passwords and credit card numbers. Additionally, users can exclude specific apps or websites from being recorded by Recall, and any activity in private browsing modes (like Chrome’s Incognito) will not be screenshotted.

While Recall is saving snapshots, an icon appears on the taskbar to let users know. Users can also pause or stop snapshots from there if needed.

Analysis: Has Microsoft Done Enough?

Microsoft has clearly rethought its approach to Recall's security and privacy, and most of these changes deserve praise, especially the encryption and the switch to opt-in. However, it's surprising that such crucial safeguards weren't part of the feature from the beginning.

For those still unsure about Recall, the good news is that it’s entirely optional. You don’t have to use it, and with it turned off by default, there’s no risk of accidentally enabling it without fully understanding how it works.

Recall is set to return for testing in October and to roll out to Copilot+ PCs in November. However, it will still be labeled a ‘preview’ feature, meaning it isn't fully finished. If you're unsure whether to use it, it might be best to wait until it's more polished.

Microsoft is talking a big game about improving Recall’s security, but the real test will come once users and testers get their hands on it. If any new issues emerge during testing, we could see the release delayed further. For now, though, Microsoft appears to be taking the concerns seriously and is working to avoid the missteps of the past.