Microsoft’s Copilot is set to run locally on AI PCs in the near future. The plan was confirmed at Intel’s AI Summit in Taipei, where Todd Lewellen, VP of Intel’s Client Computing Group, said that the next generation of AI PCs will require an NPU capable of 40 TOPS (tera operations per second) for AI workloads.

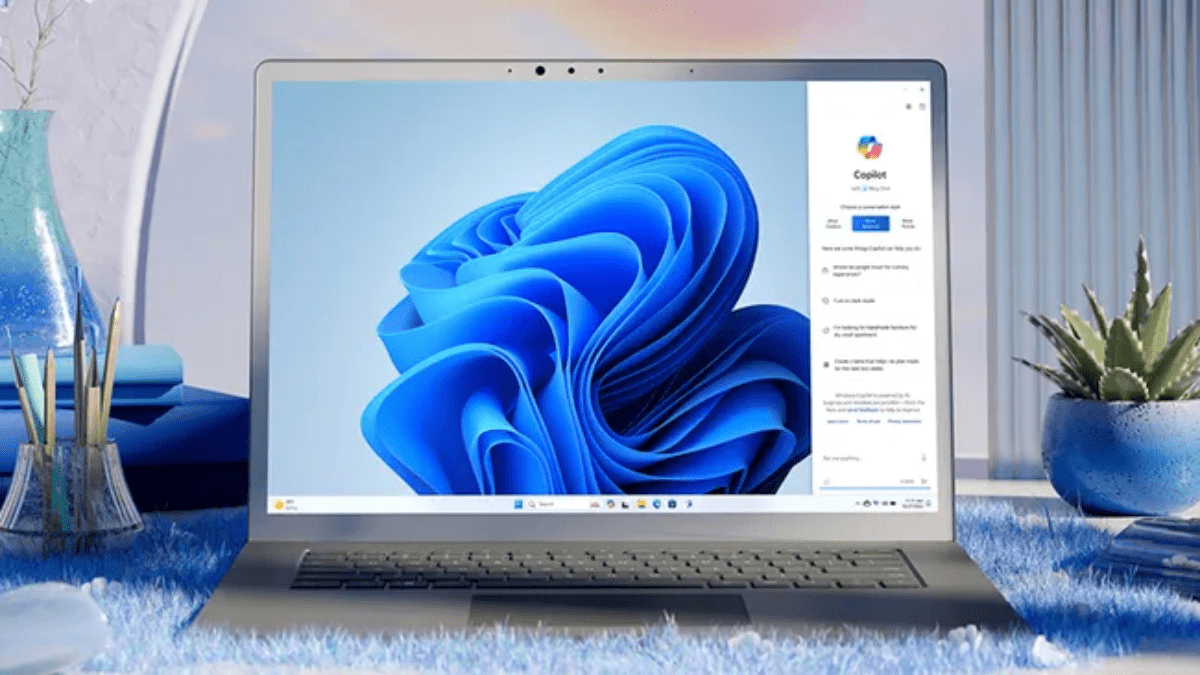

Local processing on AI PCs marks a notable departure from the current reliance on cloud computing for AI assistance. With Copilot running on the device itself, users can expect faster response times and less dependence on internet connectivity.

Running Copilot locally also has privacy and reliability benefits. Data that is processed on the device does not have to be transmitted over the internet, which reduces the privacy risks associated with cloud-based AI services. Local processing should also deliver more consistent performance where internet connectivity is limited or unreliable.

The move towards local AI processing aligns with a broader industry trend towards more powerful NPUs in upcoming devices. The Surface Pro 10, for example, is reportedly equipped with the Snapdragon X Elite chip, whose NPU is rated at 45 TOPS. The trend reflects an industry push to deliver AI capabilities directly on users’ devices rather than relying solely on cloud infrastructure.

Beyond the NPU requirement, Intel has hinted at a dedicated Copilot key on the keyboard and a possible requirement for 16GB of system RAM. These specifications have yet to be officially confirmed, but they point to a concerted effort to optimize AI performance and user experience on next-generation devices.